Source Code Analysis of the Camera1 Architecture and ASD Flow

Related file paths

Java layer:

/vendor/mediatek/proprietary/packages/apps/Camera/src/

- /com/mediatek/camera/AdditionManager.java

- /com/mediatek/camera/addition/Asd.java

- /com/android/camera/CameraManager.java

- /com/android/camera/AndroidCamera.java

Framework layer:

/frameworks/av/camera/Camera.cpp

/frameworks/av/camera/CameraBase.cpp

/frameworks/av/services/camera/libcameraservice/api1/CameraClient.cpp

/frameworks/av/services/camera/libcameraservice/mediatek/api1/CameraClient.cpp

/frameworks/base/core/java/android/hardware/Camera.java

JNI layer:

/frameworks/base/core/jni/android_hardware_Camera.cpp

Hardware layer:

vendor/mediatek/proprietary/hardware/mtkcam/

- legacy/platform/mt6735/core/featureio/pipe/aaa/Hal3AAdapter1.cpp

- legacy/platform/mt6735/core/featureio/pipe/asd/asd_hal_base.cpp

- legacy/platform/mt6735/core/featureio/pipe/asd/asd/asd_hal.cpp

- legacy/platform/mt6735/v1/client/CamClient/FD/Asd/AsdClient.cpp

- legacy/platform/mt6735/v1/client/CamClient/FD/FDClient.Thread.cpp

- main/hal/device1/common/Cam1DeviceBase.cpp

- legacy/v1/client/CamClient/CamClient.cpp

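1. Introduction

I recently ran into a problem where ASD (automatic scene detection) selected night mode indoors even under good lighting, so its thresholds needed tuning. To do that, I traced how the MTK platform implements the Camera1 architecture and mapped out the ASD workflow (App -> framework -> JNI -> HAL). This is only a walk-through of the source flow; it does not analyze the implementation details in depth. I will add more if I find time to study it further.

Approach:

- Use turning on the ASD switch in Settings as the trigger point and the update of the preview screen / scene-detection result as the end point, and capture the camera mtklog;

- Search the log for key information to find the App-layer entry point, then analyze what the upper layer and the framework layer do;

- Search the log for key information to find the HAL-layer entry point, then analyze event handling and message passing in the HAL layer;

- Combine the findings of the two previous steps to analyze the JNI communication between the framework layer and the HAL layer;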

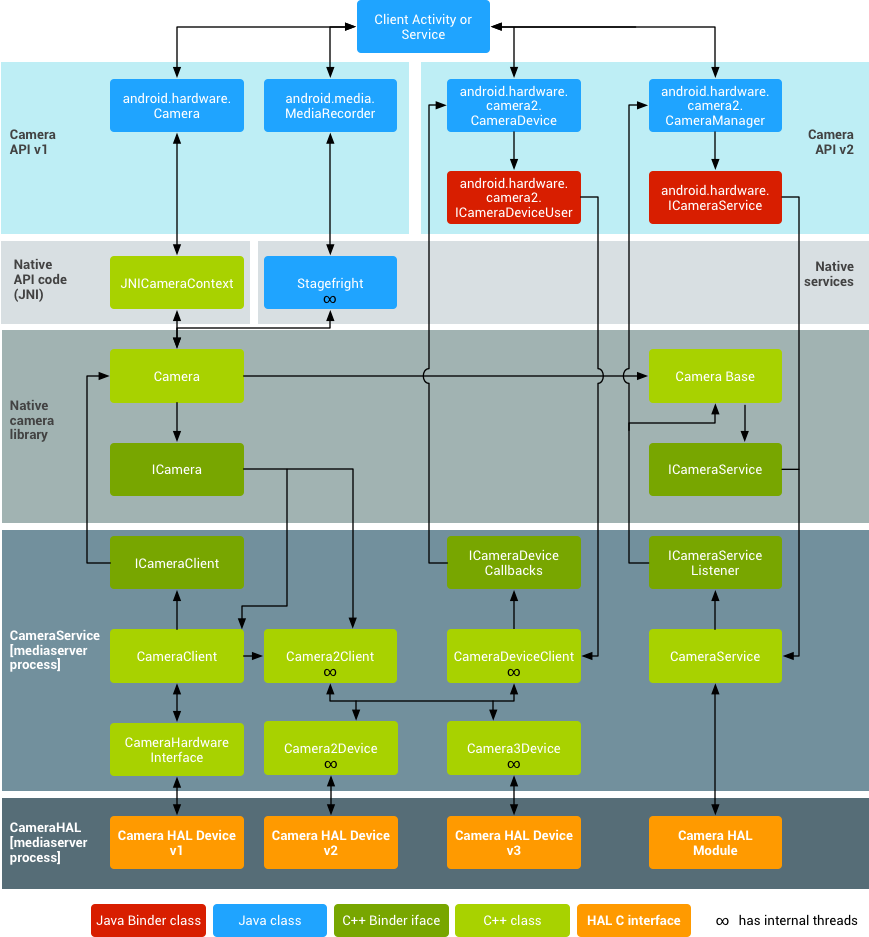

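2. Architecture

The camera architecture diagram is shown below:

Application framework

At the application framework level is the app's code, which uses the android.hardware.Camera API to interact with the camera hardware. Internally, this code calls a corresponding JNI glue class to access the native code that interacts with the camera.

JNI

The JNI code associated with android.hardware.Camera is located in frameworks/base/core/jni/android_hardware_Camera.cpp. This code calls the lower-level native code to obtain access to the physical camera and returns data that is used to create the android.hardware.Camera object at the framework level.

Native framework

The native framework defined in frameworks/av/camera/Camera.cpp provides a native equivalent of the android.hardware.Camera class. This class calls the IPC binder proxies to obtain access to the camera service.

Binder IPC proxies

The IPC binder proxies facilitate communication across process boundaries. Three camera binder classes in the frameworks/av/camera directory call into the camera service. ICameraService is the interface to the camera service, ICamera is the interface to a specific opened camera device, and ICameraClient is the device's interface back to the application framework.

Camera service

The camera service, located in frameworks/av/services/camera/libcameraservice/CameraService.cpp, is the actual code that interacts with the HAL.

HAL

The hardware abstraction layer defines the standard interface that the camera service calls into and that you must implement for your camera hardware to function correctly.

Kernel driver

The camera's driver interacts with the actual camera hardware and with your HAL implementation. The camera and driver must support the YV12 and NV21 image formats so that the camera image can be previewed on the display and used for video recording.

The information above is quoted from https://source.android.com/devices/camera/ . Next, we follow the Camera1 flow from top to bottom to analyze how ASD works on the MTK platform.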

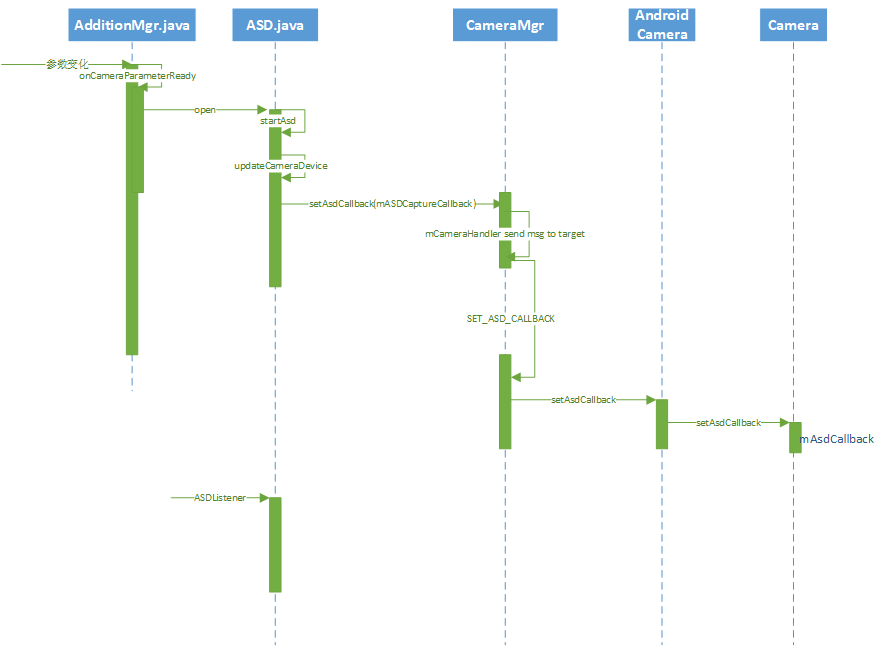

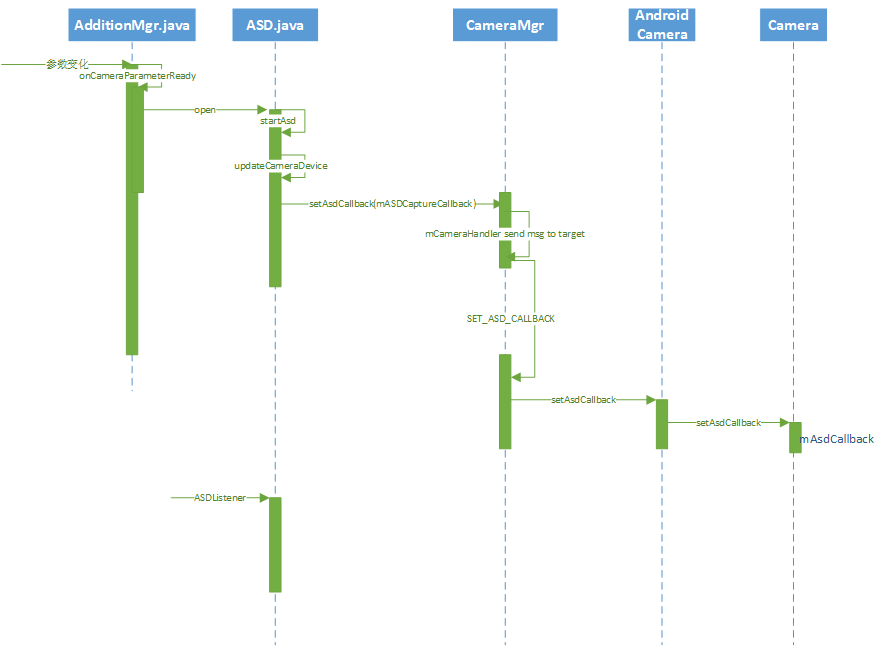

3. Startup flow (App and Framework layers)

3.1 Startup flow diagram

Next, let's walk through this flow diagram in the source code.

3.2 AdditionManager.java

    public void onCameraParameterReady(boolean isMode) {
    ...

3.3 Asd.java


3.4 CameraManager.java


3.5 AndroidCamera.java

    public void setAsdCallback(AsdCallback cb) {
    ...

3.6 android.hardware.Camera.java

    /**
     * ...
     */

3.7 Creating the AsdCallback

    // Inner class of Asd.java that implements the AsdListener interface
    ...

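4. Processing flow and message callbacks (Hardware layer)

This section describes how, in the Hardware layer, ASD initializes its client, selects a scene based on the information provided by the camera device, and returns the result to Camera1 in the framework layer, from where it is passed up to the Camera app. Let's start with the flow diagram.

4.1 Processing flow diagram

4.2 Hardware-layer file overview

- FDClient, AsdClient.cpp : each camera feature appears to have its own dedicated Client, and each Client has its own Client.Thread thread for handling its events. ASD's scene list includes a face mode (the scene decider is later passed a face-count parameter, Face_Num). I did not dig into this part and will not expand on it for now, because the overall camera architecture is very large (Orz);

- asd_hal.cpp : the core of this flow. It creates and destroys the halASD instance, initializes ASD, and performs scene selection. MTK sets the thresholds for each ASD scene here and also splits out a customization header, camera_custom_asd.h; the values in that header can be read during mHalAsdInit, which lets customers customize the ASD scene thresholds;

- CameraClient.cpp : receives the callback messages from AsdClient;

- Camera.cpp : reports the callback messages to the JNI layer;

- android_hardware_Camera.cpp : the JNI-layer implementation.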
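To make the idea of a customizable scene threshold concrete, here is a minimal sketch. It is not MTK's algorithm (the real decider sits in a prebuilt library), and every name in it (AsdCustomThresholds, SceneInputs, decideScene, the 0..255 scales, the numeric values) is hypothetical. It only illustrates how changing a value of the kind kept in a header such as camera_custom_asd.h could decide whether a well-lit indoor frame is classified as night.

```cpp
#include <cassert>

// Hypothetical stand-in for values a vendor might expose in a
// customization header; field names and meanings are invented.
struct AsdCustomThresholds {
    int nightBrightnessMax;  // at or below this brightness => "night"
    int backlitContrastMin;  // at or above this contrast   => "backlit"
};

enum class Scene { Auto, Night, Backlit, Portrait };

// Hypothetical per-frame statistics (stand-ins for 3A and FD data).
struct SceneInputs {
    int brightness;  // arbitrary 0..255 scale, assumed for this sketch
    int contrast;    // arbitrary 0..255 scale, assumed for this sketch
    int faceCount;   // number of detected faces
};

// Toy decider: checks the thresholds in a fixed priority order.
inline Scene decideScene(const SceneInputs& in, const AsdCustomThresholds& t) {
    assert(in.faceCount >= 0);
    if (in.brightness <= t.nightBrightnessMax) return Scene::Night;
    if (in.contrast >= t.backlitContrastMin)   return Scene::Backlit;
    if (in.faceCount > 0)                      return Scene::Portrait;
    return Scene::Auto;
}
```

With nightBrightnessMax set to 90, a frame with brightness 70 is classified as Night; lowering the threshold to 40 makes the same frame fall back to Auto, which is the kind of adjustment described in the introduction.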

4.3 FDClient.Thread.cpp

    // When the PreviewClient state changes, an eID_WAKEUP message is sent
    ...

4.4 AsdClient.cpp

    void
    ...

4.5 asd_hal.cpp

ASD initialization:

    MINT32 halASD::mHalAsdInit(void* AAAData, void* working_buffer, MUINT8 SensorType, MINT32 Asd_Buf_Width, MINT32 Asd_Buf_Height)
    ...

ASD scene detection:

    MINT32 halASD::mHalAsdDecider(void* AAAData, MINT32 Face_Num, mhal_ASD_DECIDER_UI_SCENE_TYPE_ENUM &Scene)
    {
        MINT32 Retcode = S_ASD_OK;
        AAA_ASD_PARAM* rASDInfo = (AAA_ASD_PARAM*)AAAData;
        ASD_DECIDER_RESULT_STRUCT MyDeciderResult;
        ASD_SCD_RESULT_STRUCT gMyAsdResultInfo;
        ASD_DECIDER_INFO_STRUCT gMyDeciderInfo;
        ...
        // Fill gMyDeciderInfo with the FD (face detection) and 3A (AE, AWB, AF) information
        ...
        // m_pMTKAsdObj was created during ASD init and is used to call functions wrapped in MTK's low-level library.
        // AsdFeatureCtrl and AsdMain are implemented in /vendor/xxx/libs
        // and can be located by grepping for their names.
        m_pMTKAsdObj->AsdFeatureCtrl(ASD_FEATURE_GET_RESULT, 0, &gMyAsdResultInfo);
        memcpy(&(gMyDeciderInfo.InfoScd), &gMyAsdResultInfo, sizeof(ASD_SCD_RESULT_STRUCT));
        m_pMTKAsdObj->AsdMain(ASD_PROC_DECIDER, &gMyDeciderInfo);
        m_pMTKAsdObj->AsdFeatureCtrl(DECIDER_FEATURE_GET_RESULT, 0, &MyDeciderResult);
        MY_LOGD("[mHalAsdDecider] detect Scene is %d, Face Num:%d \n", MyDeciderResult.DeciderUiScene, gMyDeciderInfo.InfoFd.FdFaceNum);
        // The scene-detection result
        Scene = (mhal_ASD_DECIDER_UI_SCENE_TYPE_ENUM) MyDeciderResult.DeciderUiScene;
        //Scene=mhal_ASD_DECIDER_UI_AUTO;
        return Retcode;
    }

4.6 CameraClient.cpp

Let's start with the mNotifyCb function mentioned in AsdClient. It is set up in CameraClient's initialize function. Here is the code:

    status_t CameraClient::initialize(CameraModule *module) {
        ...
        mHardware = new CameraHardwareInterface(camera_device_name);
        ...
        // This ends up in Cam1DeviceBase's setCallbacks() function
        mHardware->setCallbacks(notifyCallback,
                dataCallback,
                dataCallbackTimestamp,
                (void *)(uintptr_t)mCameraId);
        ...
    }

Next, let's look at the implementation of Cam1DeviceBase's setCallbacks function:

    // Set the notification and data callbacks
    ...

As analyzed earlier, once AsdClient obtains the scene-detection result it calls mNotifyCb. From the analysis above, mNotifyCb points to CameraClient's notifyCallback function. Here is that function:

    // Callback messages can be dispatched to internal handlers or pass to our
    ...
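The registration pattern described here can be sketched in isolation. This is a simplified model, not the MTK or AOSP code: FakeDevice, FakeClient, and the numeric message constants are hypothetical. Only the shape follows the text, namely a C-style function pointer plus an opaque user cookie that is stored once by setCallbacks and invoked later by the producer. One deliberate simplification: the real CameraClient passes the camera id as the cookie, while the sketch passes the object pointer directly to stay short.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical message ids; the real values live in vendor headers.
constexpr int32_t kMsgExtNotify = 0x40000000;
constexpr int32_t kExtNotifyAsd = 0x00000002;

// C-style notify callback: message type, two payload ints, opaque cookie.
using NotifyCallback = void (*)(int32_t msgType, int32_t ext1, int32_t ext2, void* user);

// Stand-in for the HAL-side device that stores the callback.
class FakeDevice {
public:
    void setCallbacks(NotifyCallback notifyCb, void* user) {
        mNotifyCb = notifyCb;
        mUser = user;
    }

    // Stand-in for a feature client reporting a detected scene.
    void reportScene(int32_t scene) {
        if (mNotifyCb != nullptr) {
            mNotifyCb(kMsgExtNotify, kExtNotifyAsd, scene, mUser);
        }
    }

private:
    NotifyCallback mNotifyCb = nullptr;
    void* mUser = nullptr;
};

// Stand-in for the service-side client that receives the callback.
struct FakeClient {
    int32_t lastMsgType = 0;
    int32_t lastExt1 = 0;
    int32_t lastExt2 = 0;
    int callCount = 0;

    // Static trampoline: recovers the instance from the cookie.
    static void notifyCallback(int32_t msgType, int32_t ext1, int32_t ext2, void* user) {
        assert(user != nullptr);
        FakeClient* self = static_cast<FakeClient*>(user);
        self->lastMsgType = msgType;
        self->lastExt1 = ext1;
        self->lastExt2 = ext2;
        ++self->callCount;
    }
};
```

Calling dev.setCallbacks(&FakeClient::notifyCallback, &client) and then dev.reportScene(3) delivers (kMsgExtNotify, kExtNotifyAsd, 3) to the client, which mirrors how the scene result travels from AsdClient to CameraClient.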

The msgType passed in the mNotifyCb call is MTK_CAMERA_MSG_EXT_NOTIFY, so execution continues into handleMtkExtNotify, which is implemented in MediaTek's own CameraClient.cpp:

    void CameraClient::handleMtkExtNotify(int32_t ext1, int32_t ext2)
    {
        int32_t const extMsgType = ext1;
        switch (extMsgType)
        {
        case MTK_CAMERA_MSG_EXT_NOTIFY_CAPTURE_DONE:
            handleMtkExtCaptureDone(ext1, ext2);
            break;
        //
        case MTK_CAMERA_MSG_EXT_NOTIFY_SHUTTER:
            handleMtkExtShutter(ext1, ext2);
            break;
        //
        case MTK_CAMERA_MSG_EXT_NOTIFY_BURST_SHUTTER:
            handleMtkExtBurstShutter(ext1, ext2);
            break;
        case MTK_CAMERA_MSG_EXT_NOTIFY_CONTINUOUS_SHUTTER:
            handleMtkExtContinuousShutter(ext1, ext2);
            break;
        case MTK_CAMERA_MSG_EXT_NOTIFY_CONTINUOUS_END:
            handleMtkExtContinuousEnd(ext1, ext2);
            break;
        //
        default:
            handleGenericNotify(MTK_CAMERA_MSG_EXT_NOTIFY, ext1, ext2);
            break;
        }
    }
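The routing rule in this switch (handle a few known extended messages specially, forward everything else unchanged) can be shown with a small stand-alone model. The enum values and the Route type below are hypothetical and are not the MTK constants.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical extended message ids (not the real MTK values).
enum ExtMsg : int32_t {
    kExtCaptureDone = 1,
    kExtShutter     = 2,
    kExtAsd         = 3,  // deliberately has no dedicated case below
};

enum class Route { CaptureDone, Shutter, Generic };

// Mirrors the shape of the switch: known ids get their own handler,
// anything else is forwarded through the generic notify path.
inline Route routeExtNotify(int32_t ext1) {
    switch (ext1) {
        case kExtCaptureDone: return Route::CaptureDone;
        case kExtShutter:     return Route::Shutter;
        default:              return Route::Generic;
    }
}
```

In this model routeExtNotify(kExtAsd) yields Route::Generic, the same way an ASD notification reaches the default branch of the real switch.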

The ext1 passed in is MTK_CAMERA_MSG_EXT_NOTIFY_ASD, which has no dedicated case, so the default branch hands it back to the stock Android CameraClient's handleGenericNotify function:

    void CameraClient::handleGenericNotify(int32_t msgType,
        int32_t ext1, int32_t ext2) {
        //!++
        #ifdef MTK_CAM_FRAMEWORK_DEFAULT_CODE
        //!--
        sp<hardware::ICameraClient> c = mRemoteCallback;
        //!++
        #else
        sp<hardware::ICameraClient> c;
        {
            Mutex::Autolock remoteCallbacklock(mRemoteCallbackLock);
            c = mRemoteCallback;
        }
        #endif
        //!--
        //!++ There some dead lock issue in camera hal, so we need to disable this function before all dead lock issues hase been fixed.
        #ifdef MTK_CAM_FRAMEWORK_DEFAULT_CODE
        mLock.unlock();
        #endif
        //!--
        // Besides notifyCallback, the preview flow shows another approach:
        // copy the current frame and send the copied data out directly
        if (c != 0) {
            c->notifyCallback(msgType, ext1, ext2);
        }
    }

As shown above, the message is sent out like this:

    sp<hardware::ICameraClient> c = mRemoteCallback;
    c->notifyCallback(msgType, ext1, ext2);

So where is mRemoteCallback initialized? A search shows that it is assigned in CameraClient::connect; tracing further, it turns out to be initialized through CameraClient's constructor. Several layers of inheritance are involved here (CameraClient -> CameraService::Client -> CameraService::BasicClient). Here is the code:

    // step 1:
    CameraClient::CameraClient(const sp<CameraService>& cameraService,
            const sp<hardware::ICameraClient>& cameraClient, // an ICameraClient is passed in here
            const String16& clientPackageName,
            int cameraId, int cameraFacing,
            int clientPid, int clientUid,
            int servicePid, bool legacyMode):
            // This calls the Client constructor, passing cameraClient along.
            // Client is a nested class of CameraService.
            Client(cameraService, cameraClient, clientPackageName,
                    cameraId, cameraFacing, clientPid, clientUid, servicePid)

    // step 2:
    CameraService::Client::Client(const sp<CameraService>& cameraService,
            const sp<ICameraClient>& cameraClient,
            const String16& clientPackageName,
            int cameraId, int cameraFacing,
            int clientPid, uid_t clientUid,
            int servicePid) :
            CameraService::BasicClient(cameraService,
                    IInterface::asBinder(cameraClient),
                    clientPackageName,
                    cameraId, cameraFacing,
                    clientPid, clientUid,
                    servicePid)
    {
        int callingPid = getCallingPid();
        LOG1("Client::Client E (pid %d, id %d)", callingPid, cameraId);
        // mRemoteCallback is assigned here
        mRemoteCallback = cameraClient;
        cameraService->loadSound();
        LOG1("Client::Client X (pid %d, id %d)", callingPid, cameraId);
    }
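Here is a reduced model of this constructor chain, assuming nothing beyond what the excerpt shows: the derived class forwards the callback interface to its base, and the base stores it in mRemoteCallback. The class names carry a Fake prefix and std::shared_ptr stands in for android::sp; both are substitutions made for this sketch, and RecordingIface is a hypothetical test double.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Stand-in for ICameraClient: the interface back to the application side.
struct FakeCameraClientIface {
    virtual ~FakeCameraClientIface() = default;
    virtual void notifyCallback(int msgType, int ext1, int ext2) = 0;
};

// Stand-in for CameraService::Client: owns the remote callback.
class FakeServiceClient {
public:
    explicit FakeServiceClient(std::shared_ptr<FakeCameraClientIface> cameraClient)
        : mRemoteCallback(std::move(cameraClient)) {}
    virtual ~FakeServiceClient() = default;

    const std::shared_ptr<FakeCameraClientIface>& remoteCallback() const {
        return mRemoteCallback;
    }

protected:
    std::shared_ptr<FakeCameraClientIface> mRemoteCallback;
};

// Stand-in for CameraClient: only forwards the argument to its base.
class FakeApi1Client : public FakeServiceClient {
public:
    explicit FakeApi1Client(std::shared_ptr<FakeCameraClientIface> cameraClient)
        : FakeServiceClient(std::move(cameraClient)) {}

    // Same shape as handleGenericNotify: forward if a callback is set.
    void handleGenericNotify(int msgType, int ext1, int ext2) {
        std::shared_ptr<FakeCameraClientIface> c = mRemoteCallback;
        if (c != nullptr) {
            c->notifyCallback(msgType, ext1, ext2);
        }
    }
};

// Recording implementation used to observe the forwarded call.
struct RecordingIface : FakeCameraClientIface {
    int lastExt1 = -1;
    int lastExt2 = -1;
    void notifyCallback(int /*msgType*/, int ext1, int ext2) override {
        lastExt1 = ext1;
        lastExt2 = ext2;
    }
};
```

Constructing FakeApi1Client with a RecordingIface and calling handleGenericNotify shows the object passed to the most-derived constructor being the one that finally receives the notification.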

Now the picture is clear: we only need to find where this CameraClient is initialized. Let's start from Camera's connect method:

    // step 1:
    ...

So the c->notifyCallback call ends up in Camera::notifyCallback:

    // callback from camera service
    void Camera::notifyCallback(int32_t msgType, int32_t ext1, int32_t ext2)
    {
        return CameraBaseT::notifyCallback(msgType, ext1, ext2);
    }

    template <typename TCam, typename TCamTraits>
    void CameraBase<TCam, TCamTraits>::notifyCallback(int32_t msgType, int32_t ext1, int32_t ext2)
    {
        sp<TCamListener> listener;
        {
            Mutex::Autolock _l(mLock);
            listener = mListener;
        }
        if (listener != NULL) {
            listener->notify(msgType, ext1, ext2);
        }
    }
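The locking pattern in CameraBase::notifyCallback (copy the listener pointer while holding the mutex, then invoke it after the lock is released) is worth seeing on its own, because it lets a listener call back into the object without deadlocking. The sketch below uses std::mutex and std::shared_ptr in place of android::Mutex and sp; Notifier and CountingListener are hypothetical names.

```cpp
#include <cassert>
#include <memory>
#include <mutex>
#include <utility>

struct Listener {
    virtual ~Listener() = default;
    virtual void notify(int msgType, int ext1, int ext2) = 0;
};

class Notifier {
public:
    void setListener(std::shared_ptr<Listener> listener) {
        std::lock_guard<std::mutex> lock(mLock);
        mListener = std::move(listener);
    }

    void notifyCallback(int msgType, int ext1, int ext2) {
        std::shared_ptr<Listener> listener;
        {
            // Hold the lock only long enough to take a strong reference.
            std::lock_guard<std::mutex> lock(mLock);
            listener = mListener;
        }
        // The lock is released here, so the listener may call
        // setListener() on this object from inside notify().
        if (listener != nullptr) {
            listener->notify(msgType, ext1, ext2);
        }
    }

private:
    std::mutex mLock;
    std::shared_ptr<Listener> mListener;
};

// Listener that unregisters itself on the first notification.
struct CountingListener : Listener {
    explicit CountingListener(Notifier& owner) : mOwner(owner) {}

    void notify(int /*msgType*/, int /*ext1*/, int /*ext2*/) override {
        ++count;
        // Re-entrant call: this would deadlock if notifyCallback
        // still held mLock while invoking the listener.
        mOwner.setListener(nullptr);
    }

    int count = 0;

private:
    Notifier& mOwner;
};
```

The local strong reference also keeps the listener alive for the duration of the call, even though it removes itself from the Notifier while it is running.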

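The mListener here is set in android_hardware_Camera.cpp, so next we move into the JNI layer (almost at the Java layer at last ^^).

5. Message reporting (JNI layer)

First, let's look at the code that sets mListener:

    static jint android_hardware_Camera_native_setup(...)
    ...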

So listener->notify calls the MtkJNICameraContext::notify function:

    void JNICameraContext::notify(int32_t msgType, int32_t ext1, int32_t ext2)
    {
        ALOGV("notify");
        ...
        // post_event is bound to postEventFromNative() in android_hardware_Camera.cpp,
        // so this calls the postEventFromNative method in
        // frameworks/base/core/java/android/hardware/Camera.java
        env->CallStaticVoidMethod(mCameraJClass, fields.post_event,
                mCameraJObjectWeak, msgType, ext1, ext2, NULL);
    }

    jclass clazz = FindClassOrDie(env, "android/hardware/Camera");
    fields.post_event = GetStaticMethodIDOrDie(env, clazz, "postEventFromNative",
            "(Ljava/lang/Object;IIILjava/lang/Object;)V");

Now let's see what Camera.java does:

    private static void postEventFromNative(Object camera_ref,
    ...
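The string passed to GetStaticMethodIDOrDie in the JNI code is a standard JNI method descriptor: parameter types inside the parentheses, the return type after them, with I for int, V for void, and L<class>; for an object type. As a reading aid, here is a small decoder for the subset of descriptors that appears in this walk-through. decodeJniType and describeJniDescriptor are hypothetical helpers written for this article; they are not part of JNI or Android, and they do not handle array types.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Decodes one type starting at desc[pos] and advances pos past it.
// Supports primitives and L<class>; object types; arrays are not handled.
inline std::string decodeJniType(const std::string& desc, std::size_t& pos) {
    assert(pos < desc.size());
    const char c = desc[pos++];
    switch (c) {
        case 'V': return "void";
        case 'Z': return "boolean";
        case 'B': return "byte";
        case 'C': return "char";
        case 'S': return "short";
        case 'I': return "int";
        case 'J': return "long";
        case 'F': return "float";
        case 'D': return "double";
        case 'L': {
            const std::size_t end = desc.find(';', pos);
            assert(end != std::string::npos);
            std::string name = desc.substr(pos, end - pos);
            for (char& ch : name) {
                if (ch == '/') ch = '.';  // binary name -> dotted name
            }
            pos = end + 1;
            return name;
        }
        default: return "?";  // unsupported in this sketch
    }
}

// Turns "(args)ret" into "ret (arg1, arg2, ...)".
inline std::string describeJniDescriptor(const std::string& desc) {
    assert(!desc.empty() && desc[0] == '(');
    std::size_t pos = 1;
    std::string params;
    while (pos < desc.size() && desc[pos] != ')') {
        if (!params.empty()) params += ", ";
        params += decodeJniType(desc, pos);
    }
    assert(pos < desc.size());
    ++pos;  // skip ')'
    const std::string ret = decodeJniType(desc, pos);
    return ret + " (" + params + ")";
}
```

For the descriptor used for postEventFromNative this yields void (java.lang.Object, int, int, int, java.lang.Object), which lines up with the five arguments passed to CallStaticVoidMethod: the weak camera reference, msgType, ext1, ext2, and a final object that is NULL for this notification.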

OK, that completes the walk-through of how the message is handled in the JNI layer and reported up to the framework and then to the App.